
One notable storyline in climate science over the past couple of years has been the effort to make sense of the latest generation of climate models. In support of the upcoming report of the Intergovernmental Panel on Climate Change (IPCC), modeling groups around the world have submitted their simulations to the latest round of the Coupled Model Intercomparison Project, known as CMIP6. These submissions showed that updates to a number of models made them more sensitive to greenhouse gases, meaning they project greater amounts of future warming.

Besides diagnosing the behavior responsible for this change, climate scientists have also been working through the implications. Should we be alarmed by the results, or are they outliers? Climate models are just one tool among many for estimating the Earth's true “climate sensitivity,” so their behavior has to be viewed in the full context of all the other evidence.

For a number of reasons, research is converging on the idea that the highest temperature projections are outliers; these hotter models seem to be running too hot. That will present a challenge for the scientists working on the next IPCC report: how much influence should these outliers have on projections of future warming?

Weighting game

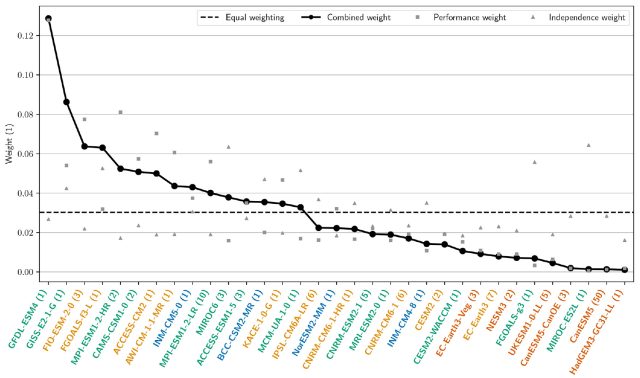

One way to represent the range of uncertainty in projections is simply to average all available model simulations, bracketed by error bars that mark the highest and lowest simulations. This is an agnostic approach that makes no attempt to judge the quality of each model. Another approach is to use a weighted average, scoring each model in some way to produce what is, hopefully, a more realistic projection. That way, adding several versions of one model that gets drastically different results, for example, wouldn't unduly shift your overall answer.
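To make the difference between the two approaches concrete, here is a minimal Python sketch (assuming NumPy is available) comparing an equally weighted ensemble mean with a weighted one. The function name `ensemble_summary` and the warming values are hypothetical illustrations, not numbers from the study.

```python
import numpy as np


def ensemble_summary(projections, weights=None):
    """Return (mean, lowest, highest) for an ensemble of model projections.

    weights=None gives every simulation an equal say (the agnostic approach);
    otherwise the weights are normalized to sum to 1 for a weighted mean.
    The lowest/highest bounds are the same either way in this sketch.
    """
    x = np.asarray(projections, dtype=float)
    if weights is None:
        w = np.full(x.shape, 1.0 / x.size)
    else:
        w = np.asarray(weights, dtype=float)
        w = w / w.sum()
    return float(np.sum(w * x)), float(x.min()), float(x.max())


# Hypothetical warming values (°C): three distinct models, plus two extra
# copies of the 4.0 model submitted as separate "versions".
warming = [3.0, 3.5, 4.0, 4.0, 4.0]
mean_equal, low, high = ensemble_summary(warming)
# Let the three copies share one model's worth of weight between them.
mean_weighted, _, _ = ensemble_summary(warming, [1, 1, 1/3, 1/3, 1/3])
print(f"{mean_equal:.2f} {mean_weighted:.2f}")  # prints 3.70 3.50
```

With equal weights, the duplicated model drags the mean upward; when its copies share a single model's weight, the result returns to the three-model average.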

A new study led by Lukas Brunner at ETH Zurich uses an established method to weight the new model simulations based on how well they match observations over the past few decades, as well as how closely related each model is to the others.

Although different climate models offer very useful independent checks on one another, they are not entirely independent. Some models are derived from others, some share components, and some share methods. Sorting this out is not as easy as checking the history of a GitHub fork. In this case, the researchers compare the spatial patterns of temperature and atmospheric pressure in each model to calculate how similar the models are. The more similar two models are, the more methods or code they are assumed to share, so each gets a little less influence on the overall average. This minimizes the effect of double-counting models that are not truly independent of each other.
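The similarity idea can be sketched in Python. This assumes the pairwise distances between models have already been computed from their temperature and pressure patterns, and it uses a Gaussian-kernel independence term of the kind found in published performance-and-interdependence weighting schemes. The function name, the `sigma_s` parameter, and the example distances are illustrative assumptions, not the paper's code or values.

```python
import numpy as np


def independence_weights(model_distances, sigma_s):
    """Unnormalized independence factor for each model.

    model_distances: symmetric (n, n) array of distances S_ij between models,
        e.g. RMS differences between their temperature and pressure maps.
    sigma_s: similarity radius; pairs much closer than this count as
        near-duplicates.
    Each model gets 1 / (1 + sum over other models of exp(-(S_ij / sigma_s)**2)),
    so a model with one exact twin ends up with about half the weight.
    """
    s = np.asarray(model_distances, dtype=float)
    kernel = np.exp(-(s / sigma_s) ** 2)
    np.fill_diagonal(kernel, 0.0)  # a model is not its own duplicate
    return 1.0 / (1.0 + kernel.sum(axis=1))


# Hypothetical example: models A and B are near-identical, C is unrelated.
distances = [[0.0, 0.0, 10.0],
             [0.0, 0.0, 10.0],
             [10.0, 10.0, 0.0]]
print(independence_weights(distances, sigma_s=1.0))  # about 0.5, 0.5 and 1.0
```

The two near-identical models together carry roughly the weight of one independent model, which is the double-counting correction described above.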

The more important factor in the weighting, though, was the models' ability to recreate the past. Obviously, models have to demonstrate that they can match real-world data before we can trust their projections of the future. All models are tested this way during their development, but they don't end up with identical fits to the past, especially since the past is complicated: models can be compared on regional temperatures, precipitation, atmospheric pressure, and so on.

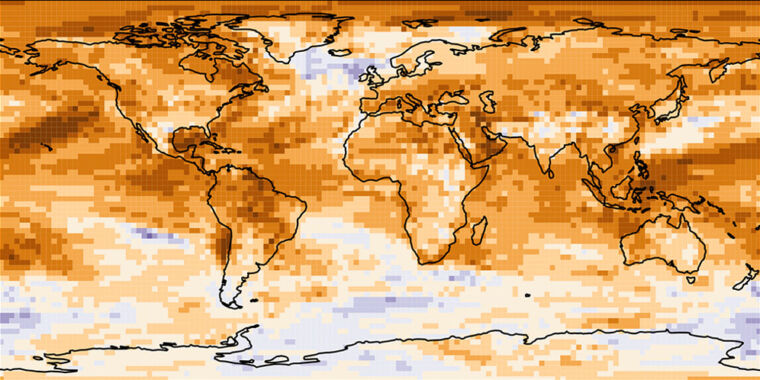

The researchers used five key properties from global datasets spanning 1980 to 2014. Each model was rated on how closely it matched temperature, temperature trends, temperature variability, atmospheric pressure, and atmospheric pressure variability.
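A rating like this can be sketched in Python under several assumptions: each diagnostic is already a gridded field per model and for observations, root-mean-square error is the distance, and each diagnostic's errors are divided by their median across models so that no single variable dominates before the diagnostics are averaged. The Gaussian conversion from distance to weight, the function names, the `sigma_d` parameter, and the two-diagnostic toy data (standing in for the study's five) are all illustrative choices, not the study's exact code.

```python
import numpy as np


def performance_distance(model_fields, obs_fields):
    """Combine several observational diagnostics into one distance per model.

    model_fields: dict mapping diagnostic name -> array (n_models, n_gridpoints)
    obs_fields:   dict mapping diagnostic name -> array (n_gridpoints,)
    Each diagnostic's RMS error is divided by its median across models,
    then the normalized errors are averaged over diagnostics.
    """
    scores = []
    for name, fields in model_fields.items():
        m = np.asarray(fields, dtype=float)
        o = np.asarray(obs_fields[name], dtype=float)
        rmse = np.sqrt(np.mean((m - o) ** 2, axis=1))
        scores.append(rmse / np.median(rmse))
    return np.mean(scores, axis=0)


def performance_weights(distance, sigma_d):
    """Models far from observations (distance >> sigma_d) get weights near zero."""
    d = np.asarray(distance, dtype=float)
    return np.exp(-(d / sigma_d) ** 2)


# Hypothetical toy data: 3 models, 2 grid points, observations all zero.
model_fields = {"tas": [[1, 1], [2, 2], [3, 3]],
                "psl": [[10, 10], [20, 20], [30, 30]]}
obs_fields = {"tas": [0, 0], "psl": [0, 0]}
d = performance_distance(model_fields, obs_fields)
print(d)  # normalized distances 0.5, 1.0, 1.5
print(performance_weights(d, sigma_d=1.0))
```

The median normalization is why a pressure error of 10 and a temperature error of 1 end up counting the same here. In combined schemes of this type, a model's final weight is generally its performance factor multiplied by an independence factor, with all weights then normalized to sum to one.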

Don’t be so sensitive

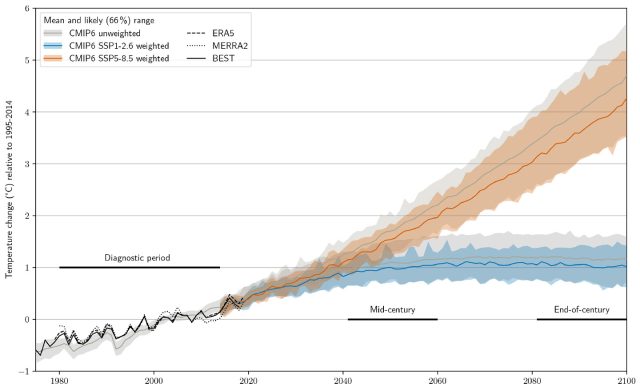

This process ends up down-weighting the models with the highest climate sensitivity, since they also do not match past observations well. For example, using a sensitivity measure called “Transient Climate Response” (the warming at the point when atmospheric CO2 has doubled in an experiment where it rises by 1 percent per year), the mean before weighting is 2°C, with error bars spanning 1.6 to 2.5°C. After weighting the models, the mean drops slightly to 1.9°C, and the error bars narrow to 1.6-2.2°C. (That range lines up fairly well with recent estimates of the true value.)
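To see how down-weighting the hottest models can narrow a range mostly from the top, here is a minimal sketch of a weighted percentile in Python. The interpolation convention, the function name `weighted_quantile`, and the sensitivity values and weights are assumptions for illustration; the study may define its ranges differently.

```python
import numpy as np


def weighted_quantile(values, weights, q):
    """Quantile q (between 0 and 1) of values under the given model weights.

    Places each model at the midpoint of its cumulative-weight interval and
    interpolates linearly between models; one of several common conventions.
    """
    v = np.asarray(values, dtype=float)
    w = np.asarray(weights, dtype=float)
    order = np.argsort(v)
    v, w = v[order], w[order]
    cdf = (np.cumsum(w) - 0.5 * w) / w.sum()
    return float(np.interp(q, cdf, v))


# Hypothetical sensitivities (°C); the hottest model fits the past worst.
tcr = [1.5, 1.8, 2.0, 2.6]
for weights in ([1, 1, 1, 1], [1, 1, 1, 0.2]):
    low = weighted_quantile(tcr, weights, 0.05)
    high = weighted_quantile(tcr, weights, 0.95)
    # Lower end stays at 1.5; upper end drops from 2.6 to about 2.54.
    print(round(low, 2), round(high, 2))
```

Because only the hot outlier loses weight, the lower bound holds steady while the upper bound comes down, the same pattern as in the Transient Climate Response numbers.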

Apply that to projections of future warming and something similar happens. In a high-emissions scenario, for example, the projected warming between 2005 and 2090 drops from 4.1°C (3.1-4.9°C) to 3.7°C (3.1-4.6°C). In a low-emissions scenario, the mean projected warming of 1.1°C (0.7-1.6°C) falls to 1.0°C (0.7-1.4°C) after weighting.

The upshot is that there is no sign that the state of scientific knowledge has changed. As a result of ongoing development, especially attempts to make simulated clouds more realistic, some models have become more sensitive to increases in greenhouse gases, so they project more future warming. But that doesn't seem to have made them better representations of Earth's climate as a whole. Rather than worrying that the physics of climate change is even worse than we thought, we can stay focused on the urgent need to eliminate greenhouse gas emissions.

Earth System Dynamics, 2020. DOI: 10.5194/esd-11-995-2020
